Practical Introduction to Information Theory

Practical Introduction to Information Theory, available at $44.99, has an average rating of 5, with 36 lectures, based on 4 reviews, and has 35 subscribers.

You will learn how to formulate problems as probability problems and solve them using information theory, including the mathematical basis of the theory, its role as the basis of a machine learning approach, and how the entropy approach differs from the maximum likelihood approach. The course also covers the use of entropy in thermodynamics, calculating molecular energy distributions in Excel, using Goal Seek, Excel Solver and the logit transform, and applications to mineral processing (including mass balancing compared with conventional least squares), elections, games and puzzles.

The course is designed for mathematicians seeking to learn information theory, physicists who are specifically interested in the relevance of entropy to thermodynamics, mineral processors and chemical engineers who are interested in modern analytical methods, and machine learning experts who are interested in a machine learning approach based on information theory. It is also particularly suited to graduating high school students seeking to enrol in Engineering or quantitative sciences.


Summary

Title: Practical Introduction to Information Theory

Price: $44.99

Average Rating: 5

Number of Lectures: 36

Number of Published Lectures: 36

Number of Curriculum Items: 36

Number of Published Curriculum Objects: 36

Original Price: $24.99

Quality Status: approved

Status: Live

What You Will Learn

- Learn how to formulate problems as probability problems.

- Solve probability problems using information theory.

- Understand how information theory is the basis of a machine learning approach.

- Learn the mathematical basis of information theory.

- Identify the differences between the maximum likelihood theory approach and the entropy approach.

- Understand the basics of the use of entropy for thermodynamics.

- Calculate the molecular energy distributions using Excel.

- Learn to apply Information Theory to various applications such as mineral processing, elections, games and puzzles.

- Learn how Excel can be applied to Information Theory problems. This includes using Goal Seek and Excel Solver.

- Understand how a Logit Transform can be applied to a probability distribution.

- Apply Logit Transform to probability problems to enable Excel Solver to be successfully applied.

- Solve mineral processing mass balancing problems using information theory, and compare with conventional least squares approaches.

Who Should Attend

- This course is designed for: mathematicians seeking to learn information theory, physicists who are specifically interested in the relevance of entropy to thermodynamics, mineral processors and chemical engineers who are interested in modern analytical methods, and machine learning experts who are interested in a machine learning approach based on information theory.

- The course is particularly suited to graduating high school students seeking to enrol in Engineering or quantitative sciences.

Section 1 Introduction

Information theory is also called ‘the method of maximum entropy’. Here the title ‘information theory’ is preferred as it is more consistent with the course’s objective – that is to provide plausible deductions based on available information.

Information theory is a relatively new branch of applied and probabilistic mathematics – and is relevant to any problem that can be described as a probability problem.

Hence there are two primary skills taught in this course.

1. How to formulate problems as probability problems.

2. How to then solve those problems using information theory.

Section 1 largely focuses on the probabilistic basis of information theory.

Lecture 1 Scope of Course

In this lecture we discuss the scope of the course. Whilst section 1 provides the probabilistic basis of information theory the following sections are:

2. The thermodynamic perspective

3. Mineral Processing Applications

4. Other examples

5. Games and puzzles

6. Close

Information theory is the foundation of one particular approach to machine learning. This course does not go deeply into machine learning, but it does provide some insight into how information theory underpins that approach.

Lecture 2 Combinatorics as a basis for Probability Theory

This course does not aim to be a probability course – and only provides a probability perspective relevant to information theory.

In this lecture you will learn basic probability notation and formulae.

By working through the exercises you will develop an understanding of fundamental functions such as factorials and combinations.
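As a minimal sketch of the two functions mentioned above (the course itself works in Excel, where the worksheet equivalents are `FACT` and `COMBIN`), the following Python snippet builds the number of combinations from factorials. The function name `n_choose_k` is illustrative only and does not come from the course.

```python
import math

def n_choose_k(n: int, k: int) -> int:
    """Number of ways to choose k items from n, built from factorials."""
    return math.factorial(n) // (math.factorial(k) * math.factorial(n - k))

# Ways to pick 2 balls out of 5, computed two ways
print(n_choose_k(5, 2))
print(math.comb(5, 2))  # standard-library equivalent
```

Writing the function out in terms of factorials, rather than calling `math.comb` directly, mirrors how the formula is usually introduced.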

Lecture 3 Probability Theory

In this lecture you will learn basic probability theory, with the primary focus being the binomial distribution. The approach is to start with single-event probabilities and use them to calculate the probabilities of multiple events. Here the single-event probabilities are equitable; i.e. the probability of choosing a particular coloured ball is 50%.

By applying the exercises, you will solve simple multiple-event probability problems building on the functions learnt in the previous lecture.
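The step from single-event to multiple-event probabilities can be illustrated by brute-force counting. The sketch below assumes four independent draws of a red or blue ball, each with probability 50%; the number of draws and the colour labels are hypothetical and not taken from the course exercises.

```python
from collections import Counter
from itertools import product

n_draws = 4  # hypothetical number of draws

# Every sequence of red (R) and blue (B) balls is equally likely
outcomes = list(product("RB", repeat=n_draws))

# Count how many sequences contain k red balls
counts = Counter(seq.count("R") for seq in outcomes)

# Probability of k red balls = favourable sequences / all sequences
probs = {k: counts[k] / len(outcomes) for k in range(n_draws + 1)}
print(probs)
```

The counts that appear are the combinations from the previous lecture, which is why combinatorics serves as the basis for these probabilities.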

Lecture 4 Non-equitable Probability

In the previous lecture the focus was on the binomial distribution where probabilities of single events are equal. Here we focus on the binomial distribution where single-event probabilities are unequal; i.e. the probability of choosing a particular type of coloured ball is no longer 50%.

By completing the exercises, you will solve more complex probability problems than previously. These problems require you to calculate multi-event probabilities where probabilities of single events are not equitable.
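For unequal single-event probabilities, the standard binomial formula applies. The following is a small sketch; the function name `binomial_pmf` and the example value of 30% are illustrative assumptions.

```python
from math import comb

def binomial_pmf(n: int, k: int, p: float) -> float:
    """Probability of exactly k successes in n independent trials,
    each with success probability p."""
    return comb(n, k) * p**k * (1.0 - p) ** (n - k)

# Probability of each possible number of red balls in 4 draws
# when a red ball is drawn 30% of the time (hypothetical value)
for k in range(5):
    print(k, binomial_pmf(4, k, 0.3))
```

Setting `p = 0.5` recovers the equitable case of the previous lecture.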

Lecture 5 Introduction to Information Theory

In this lecture you will learn the mathematical basis of information theory. In particular, the mathematical definition of entropy (as used in the course) is given.

By applying the exercises, you will understand the entropy function and how its value changes as a function of probability. You will identify that the entropy is 0 for probability values of 0 and 1.
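The listing does not state the exact definition of entropy used in the course. As an illustration only, the sketch below assumes the standard Shannon form for a two-outcome event, H(p) = -p ln p - (1 - p) ln(1 - p), with the usual convention that 0 ln 0 = 0.

```python
import math

def entropy_term(p: float) -> float:
    """Single term -p ln p, using the convention 0 ln 0 = 0."""
    return 0.0 if p == 0.0 else -p * math.log(p)

def binary_entropy(p: float) -> float:
    """Entropy of an event with probability p and its complement 1 - p."""
    return entropy_term(p) + entropy_term(1.0 - p)

# Tabulate the entropy as the probability moves from 0 to 1
for p in (0.0, 0.1, 0.3, 0.5, 0.7, 0.9, 1.0):
    print(p, binary_entropy(p))
```

Under this assumed definition the entropy is zero at both ends of the range and largest at p = 0.5.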

Lecture 6 Relative Entropy

The ‘pure’ form of entropy is based on equitable probabilities. Relative entropy is based on non-equitable probabilities. The implication of this approach is that one can use “prior probabilities”. In contrast, entropy uses “ignorant priors”. You will therefore learn that you can use available information as a prior, which in turn leads to more plausible solutions (than not using available information). This is the main reason why the course uses the expression ‘Information theory’ rather than “maximum entropy”.

In the exercise you will recognise how the relative entropy function is slightly different to the entropy function. In particular, the relative entropy is maximised when the probability equals the prior probability.
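A sketch of this behaviour, assuming the common sign convention in which relative entropy is written as -Σ p ln(p/q), so that it is at most zero and reaches its maximum when p equals the prior q. The course may use a different but equivalent form; the prior value 0.3 is a hypothetical example.

```python
import math

def relative_entropy(p: float, q: float) -> float:
    """Two-outcome relative entropy -[p ln(p/q) + (1-p) ln((1-p)/(1-q))].
    Requires 0 < q < 1; terms with zero probability contribute nothing."""
    total = 0.0
    for pi, qi in ((p, q), (1.0 - p, 1.0 - q)):
        if pi > 0.0:
            total -= pi * math.log(pi / qi)
    return total

prior = 0.3  # hypothetical prior probability
for p in (0.1, 0.2, 0.3, 0.4, 0.5):
    print(p, relative_entropy(p, prior))
```

With an "ignorant" prior of 0.5 this function differs from the plain entropy only by a constant, which is one way to see how the two are related.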

Lecture 7 Bayes Work

Most probability theory is based on forward modelling. That is, given a scenario, what will be the outcome? Bayes considered the problem of probabilistic inverse modelling. That is, look at the outcome to determine what was the initial scenario.
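To make the forward and inverse directions concrete, the sketch below uses Bayes' theorem on a hypothetical example that is not taken from the course: two bags with different fractions of red balls, an equal prior on each bag, and an observed outcome of 3 red balls in 4 draws with replacement.

```python
from math import comb

def binomial_pmf(n: int, k: int, p: float) -> float:
    """Forward model: probability of k red balls in n draws."""
    return comb(n, k) * p**k * (1.0 - p) ** (n - k)

def posterior(priors, likelihoods):
    """Bayes' theorem: posterior is proportional to prior times likelihood."""
    joint = [pr * lk for pr, lk in zip(priors, likelihoods)]
    total = sum(joint)
    return [j / total for j in joint]

red_fraction = [0.5, 0.8]   # hypothetical bags A and B
priors = [0.5, 0.5]         # no initial preference between bags

# Inverse model: which bag most plausibly produced 3 reds in 4 draws?
likelihoods = [binomial_pmf(4, 3, p) for p in red_fraction]
post = posterior(priors, likelihoods)
print(post)
```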

Lecture 8 – Simple Application of Entropy – Dice throwing.

In this lecture you will be given a simple application: a biased die. Using Excel Solver and information theory you need to calculate the most likely probability of a ‘six’ (given the die is biased). The lecture introduces Lagrange multipliers for constrained optimisation.
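The listing does not say what information about the bias is supplied in the exercise. As a sketch, assume the only known fact is the long-run average score (4.5 here, a hypothetical value; a fair die averages 3.5). Maximising entropy subject to that mean with a Lagrange multiplier gives probabilities proportional to exp(λ × face), and the multiplier can be found by bisection in place of Excel Solver.

```python
import math

FACES = [1, 2, 3, 4, 5, 6]

def maxent_probs(lam: float) -> list:
    """Maximum-entropy face probabilities for Lagrange multiplier lam."""
    weights = [math.exp(lam * f) for f in FACES]
    z = sum(weights)
    return [w / z for w in weights]

def mean_score(lam: float) -> float:
    return sum(f * p for f, p in zip(FACES, maxent_probs(lam)))

def solve_multiplier(target_mean: float, lo=-10.0, hi=10.0, tol=1e-12) -> float:
    """Bisection: mean_score increases with lam, so the root is bracketed."""
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if mean_score(mid) < target_mean:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

lam = solve_multiplier(4.5)   # assumed observed average score
probs = maxent_probs(lam)
print("P(six) =", probs[5])
```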

Lecture 9 Statistical Concepts

Entropy is fundamentally based on the binomial distribution. The most common distribution used in statistics is the normal distribution (or Gaussian distribution). An understanding of how the binomial distribution relates to the normal distribution is of value. If we use the normal distribution as an approximation to the binomial distribution and then consider the entropy of this normal distribution, we derive a simple quadratic function. One advantage of a quadratic function is that, in some cases, it is easier for Excel Solver to determine the maximum entropy for a constrained problem.

Here we compare the two approaches, binomial versus normal, and consider the strengths and weaknesses of each.

In the exercise you will chart the entropy as a function of probability for both the binomial distribution and the Gaussian distribution, and you will understand that for small departures from the prior, the two methods are very similar.
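One way to see this numerically is to compare the two-outcome relative entropy with its second-order (quadratic) expansion about the prior q, namely -(p - q)² / (2q(1 - q)). This is a sketch under the assumption that the course's Gaussian form corresponds to this expansion; the prior value is hypothetical.

```python
import math

def relative_entropy(p: float, q: float) -> float:
    """Binomial form: -[p ln(p/q) + (1-p) ln((1-p)/(1-q))], for 0 < p, q < 1."""
    return -(p * math.log(p / q) + (1.0 - p) * math.log((1.0 - p) / (1.0 - q)))

def quadratic_approx(p: float, q: float) -> float:
    """Gaussian (quadratic) form: second-order expansion about p = q."""
    return -((p - q) ** 2) / (2.0 * q * (1.0 - q))

prior = 0.3  # hypothetical prior probability
for p in (0.28, 0.30, 0.32, 0.60):
    print(p, relative_entropy(p, prior), quadratic_approx(p, prior))
```

Near the prior the two columns agree closely; at p = 0.60 they have clearly separated, which shows where the quadratic form stops being a good substitute.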

Lecture 10 Comparison of Maximum Likelihood Theory to Entropy

Maximum likelihood theory is largely a statistical concept whereas entropy is a probabilistic concept. Yet for some applications, the solution is focused on a maximum likelihood approach where an entropy approach could have been used as an alternative. Hence in this lecture you will learn the difference between the two approaches.

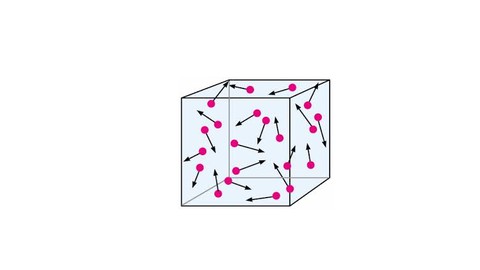

Section 2 The Thermodynamic Perspective of Entropy

Entropy as a word was first defined by physicists. Consequently, there is considerable confusion as to whether an understanding of entropy implies one should understand thermodynamics. For this reason, this course is defined as “Information Theory” rather than maximum entropy. However, entropy is used in thermodynamics – and is indeed related to entropy as used in information theory.

In this section the use of entropy by physicists is discussed, as well as how its use in thermodynamics relates to wider applications.

This section provides an introduction into the use of entropy for thermodynamics (and the basis for statistical mechanics) but is not a comprehensive course in thermodynamics.

Lecture 11 Thermodynamic Perspective

This lecture largely focuses on the historical basis of entropy as used in thermodynamics. It leads to the emergence of the branch of mathematical physics called statistical mechanics.

Lecture 12 Calculate Molecular Energy Distribution.

In this lecture the mathematical basis of molecular energy distributions is presented.

Lecture 13 Practical Application of Thermodynamics

In this lecture you will calculate the molecular energy distributions using Excel. You will solve the problem using Goal Seek.
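A rough Python analogue of the Goal Seek step, assuming the textbook maximum-entropy result that the probability of an energy level is proportional to exp(-β × energy). The ten equally spaced energy levels and the target mean energy are hypothetical values chosen for illustration, and a bisection loop stands in for Goal Seek.

```python
import math

ENERGIES = [float(i) for i in range(10)]  # hypothetical levels, arbitrary units

def boltzmann(beta: float) -> list:
    """Energy-level probabilities proportional to exp(-beta * energy)."""
    weights = [math.exp(-beta * e) for e in ENERGIES]
    z = sum(weights)
    return [w / z for w in weights]

def mean_energy(beta: float) -> float:
    return sum(e * p for e, p in zip(ENERGIES, boltzmann(beta)))

def goal_seek_beta(target: float, lo=0.0, hi=50.0, tol=1e-12) -> float:
    """Adjust beta until the mean energy hits the target.
    The mean energy falls as beta rises, so bisection applies."""
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if mean_energy(mid) > target:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

beta = goal_seek_beta(2.0)   # assumed known mean energy
distribution = boltzmann(beta)
print(beta, distribution)
```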

Lecture 14 Reconciling Thermodynamics with Information Theory.

This lecture summarises the thermodynamics using the information theory approach. The intent is that you will understand that ‘entropy’ in this course is a probabilistic concept only: whilst information theory has application to thermodynamics, solving the many problems in information theory does not require a deep understanding of thermodynamics.

Section 3 Mineral Processing Applications

The lecturer was a researcher in mineral processing for some 20 years. He recognised that many mineral processing problems could be solved using information theory. In this section a set of mineral processing problems are given:

- Comminution

- Conservation of assays

- Mass Balancing

- Mineral To Element

- Element to mineral

The section also discusses advanced methods such as:

- collating entropy functions in a VBA addin;

- using the logit transform to enable Excel Solver to solve problems that it would otherwise not be able to solve.

Although the applications are drawn from mineral processing, they will be understandable to you even if you are unfamiliar with mineral processing.

Lecture 15 Overview of Examples

The course now focuses on a wider set of applications than thermodynamics. Here an overview of applications discussed in the course is given. The lecture also discusses some applications of information theory that are not within the scope of the course.

There are four groups of applications:

- Thermodynamics

- Mineral processing (which is also relevant to chemical engineering)

- Other examples (such as elections)

- Games and puzzles

Lecture 16 Application to Comminution

The first mineral processing example is comminution (which is breakage of particles).

In this lecture entropy is used as a predictive methodology to estimate the size distribution of particles, if particles are broken to a known average size.

Lecture 17 Comminution Exercise

In this lecture you will apply the methods of the previous lecture using Excel. You will learn what is meant by a feed size distribution and what is meant by a product size distribution. The product size distribution can be considered a probability distribution, and the product size distribution before breaking particles to a smaller size is considered as a prior distribution. Hence the approach uses relative entropy.
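The relative-entropy calculation described here can be sketched as follows. The size classes, the prior distribution and the target average size are all hypothetical, and "average size" is assumed to mean the mass-weighted arithmetic mean. Maximising relative entropy subject to that mean gives product fractions proportional to prior × exp(-λ × size).

```python
import math

SIZES_MM = [8.0, 4.0, 2.0, 1.0, 0.5, 0.25]      # hypothetical size classes
PRIOR = [0.30, 0.25, 0.20, 0.12, 0.08, 0.05]    # hypothetical prior mass fractions

def product_distribution(lam: float) -> list:
    """Relative-entropy solution: prior reweighted by exp(-lam * size)."""
    weights = [q * math.exp(-lam * x) for q, x in zip(PRIOR, SIZES_MM)]
    total = sum(weights)
    return [w / total for w in weights]

def mean_size(dist) -> float:
    return sum(x * p for x, p in zip(SIZES_MM, dist))

def solve_lambda(target_mean: float, lo=0.0, hi=20.0, tol=1e-12) -> float:
    """Bisection: the mean size falls as lam rises."""
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if mean_size(product_distribution(mid)) > target_mean:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

product = product_distribution(solve_lambda(2.0))  # assumed target average, mm
print(product)
```

In this sketch the coarsest class loses mass and the finest class gains it, while the overall shape stays as close to the prior as the mean-size constraint allows.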

Lecture 18 Conservation of Assays

In mineral processing, there are ‘assays’ which are measures of either the elements or minerals. Here we focus on elements.

In this lecture you will perform a simple mass balance (or reconciliation) to ensure assays are conserved.

That is, when the particles are broken to a smaller size, if the assays for each size-class are considered the same as in the initial product size distribution then there is an inconsistency in the overall content of mineral. That is the assays need to be ‘reconciled’. Using information theory (relative entropy) the assays of the product are adjusted.

This is an example of applying information theory as a number of subproblems. Subproblem 1 is adjusting the size distribution; Subproblem 2 is adjusting the assays.

Lecture 19 Creating Entropy Addins

Thus far, all formulae have been entered directly into the Excel worksheet. However, as the Excel functions become more complex it is both tedious and inefficient to type them in from scratch. In this lecture you are shown functions that are compiled within an addin, and how to install them in a workbook.

If you are familiar with VBA you can explore the functions in detail.

Lecture 20 Mass Balancing

Mass balancing (or data reconciliation) is the method used in chemical engineering and mineral processing to adjust experimental data so that they are consistent. In this lecture you will learn how this problem can be solved using information theory, and how this approach is easier than the conventional method.

Lecture 21 Mass Balancing Excel

Using Excel, you will seek to solve a mass balance problem; and it will be shown that Excel Solver is not able to solve the problem as is. It may seem strange to have a lecture where the exercise does not solve the problem satisfactorily. However from this exercise you will learn more about the strengths and weaknesses of Excel Solver. In later lectures you will learn how to adjust the problem so that the solution can be determined using Excel Solver.

Lecture 22 Logit Transform

The logit transform converts a probability in the open interval (0, 1) to a variable in the range (-∞, ∞). Conversely, the inverse logit transform converts a variable in the range (-∞, ∞) back to the interval (0, 1). This transform allows probability problems to be more easily solved using Excel Solver.
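A minimal sketch of the two transforms, using the standard definitions logit(p) = ln(p / (1 - p)) and its inverse 1 / (1 + exp(-z)). The function names are illustrative.

```python
import math

def logit(p: float) -> float:
    """Map a probability strictly between 0 and 1 to the whole real line."""
    return math.log(p / (1.0 - p))

def inverse_logit(z: float) -> float:
    """Map any real number back to a probability.
    The two branches avoid overflow for large |z|."""
    if z >= 0.0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)

for p in (0.01, 0.2, 0.5, 0.8, 0.99):
    z = logit(p)
    print(p, z, inverse_logit(z))
```

Because any real value of z maps back to a valid probability, an optimiser that adjusts z freely can never step outside the allowed range, which is a plausible reason the transform helps a solver.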

Lecture 23 Logit Transform Exercise

You will resume the problem given in Lecture 21, this time using the logit transform.

The transformed variables are called Z, and these variables are adjusted via Excel Solver to maximise the entropy.

Lecture 24 Mineral To Element

Mineral to element is not specifically an application of information theory; rather, it provides the basis for the later information theory problem of element to mineral.

In the exercise you will take a distribution of minerals and from that calculate the distribution of elements. You will learn how to do this using two methods:

1. Conventional Sumproduct

2. Using matrix equations.

The second method is mainly suitable for people with mathematical knowledge of matrices; but the exercise is still suitable for you if you have not had previous exposure to matrices.
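The two methods can be sketched in Python as follows. The minerals, their approximate elemental mass fractions and the mineral distribution are illustrative assumptions and are not taken from the course exercise.

```python
ELEMENTS = ["Cu", "Fe", "S", "Si", "O"]
MINERALS = ["chalcopyrite", "pyrite", "quartz"]

# Approximate mass fraction of each element (row) in each mineral (column)
COMPOSITION = [
    [0.3463, 0.0000, 0.0000],  # Cu
    [0.3043, 0.4655, 0.0000],  # Fe
    [0.3494, 0.5345, 0.0000],  # S
    [0.0000, 0.0000, 0.4674],  # Si
    [0.0000, 0.0000, 0.5326],  # O
]

mineral_fractions = [0.10, 0.05, 0.85]  # hypothetical mineral distribution

# Method 1: a "sumproduct" for one element at a time
cu_assay = sum(c * m for c, m in zip(COMPOSITION[0], mineral_fractions))

# Method 2: the matrix equation elements = COMPOSITION x minerals
def mat_vec(matrix, vector):
    return [sum(a * v for a, v in zip(row, vector)) for row in matrix]

element_fractions = dict(zip(ELEMENTS, mat_vec(COMPOSITION, mineral_fractions)))
print(cu_assay)
print(element_fractions)
```

The matrix form is simply every sumproduct done at once, which is why the two methods give the same assays.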

Lecture 25 Correcting Mineral Compositions based on Assays

This lecture focuses on the inverse problem of Lecture 24. That is using elements to calculate minerals. Here the participant is reintroduced to the issues of problems being:

- Ill-posed (non-unique solution)

- Well-posed (unique solution)

- Over-posed (more information than is required).

The problem of element to mineral (as given in the exercise) is an ill-posed problem, and information theory is applied by using any previous mineral distribution as a prior.

In the exercise you will calculate the mineral composition given the elements.

Section 4 Other Applications

This section contains a diverse set of lectures that could not be grouped into distinct sections. Although the focus is on these applications, a number of new concepts are introduced:

- The use of weighting by number

- Dealing with network systems.

Lecture 26 Elections

Elections can be considered as a probability problem. Consequently, if we can predict the general swing – we can use information theory to estimate the results for each electorate.

Lecture 27 Elections Exercise

In this exercise you will apply the knowledge of the previous lecture to predict the outcome of an election. You will learn further skills using Goal Seek.

In the exercise you will identify the required swing in support for a party to win the election. You will also predict the results for each electorate. However, there is a problem with the way entropy is used in the exercise which is discussed in later lectures.
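As a rough illustration of this kind of calculation, the sketch below treats a two-party contest with hypothetical previous vote shares and voter numbers for four electorates. It assumes the previous result in each electorate is the prior and that relative entropy is weighted by the number of voters; under those assumptions the solution shifts every electorate by the same amount on the logit scale, and a bisection loop (standing in for Goal Seek) finds the shift that produces the assumed overall swing.

```python
import math

PRIOR_SHARE = [0.42, 0.48, 0.55, 0.61]   # hypothetical previous vote shares
VOTERS = [30000, 25000, 28000, 22000]    # hypothetical voters per electorate

def logit(p: float) -> float:
    return math.log(p / (1.0 - p))

def inverse_logit(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def shifted_shares(lam: float) -> list:
    """Apply a common shift lam to every electorate on the logit scale."""
    return [inverse_logit(logit(q) + lam) for q in PRIOR_SHARE]

def overall_share(shares) -> float:
    return sum(n * p for n, p in zip(VOTERS, shares)) / sum(VOTERS)

def solve_shift(target: float, lo=-10.0, hi=10.0, tol=1e-12) -> float:
    """Bisection: the overall share rises as lam rises."""
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if overall_share(shifted_shares(mid)) < target:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

target = overall_share(PRIOR_SHARE) + 0.03   # assumed 3-point general swing
shares = shifted_shares(solve_shift(target))
seats_won = sum(p > 0.5 for p in shares)
print(shares, seats_won)
```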

Lecture 28 The Problem of Weighting

For entropy problems thus far, the entropy has largely been based on the presumption that the total number can be ignored. In this lecture it is explained that ignoring the number is a fallacy. The exercise demonstrates that by incorporating the number in the entropy more realistic solutions can be obtained.

Lecture 29 Weighting Exercise

You will return to the Mass Balance problem and ensure that the entropy is weighted by solid flow, yielding results that are more plausible.

Lecture 30 Network Problems

A network problem is one where the problem can be visualised by a flowchart and objects go through various processes.

It is shown that information theory has a paradox when it comes to network problems. To overcome the problem a new syntax is presented where variables are differentiable in some equations – but not differentiable in other equations.

In the exercise you will understand the paradox via a simple network problem.

Section 5 Games and Puzzles

In this section we apply information theory to games and puzzles. Currently this section focuses on 2 games: Mastermind and Cluedo. You will identify that these 2 games are well suited to information theory and you’ll set up solutions to these games as probabilistic systems.

Lecture 31 Mastermind – explanation

Mastermind is a logic game for two or a puzzle for one. It involves trying to crack a code. In this lecture the puzzle is explained so that successive lectures can focus on using information theory to solve Mastermind.

In this exercise you will play the game Mastermind in order to be familiar with the game for later lectures.

Lecture 32 Applying Information Theory to Mastermind

Mastermind is a perfect example of the application of information theory. Here you will learn the methodology for solving Mastermind. The puzzle involves using ‘trials’ from which information is obtained about a hidden code. Information theory is applied using the new information to update the probabilities.

In the exercise you will set up the Mastermind problem using a probabilistic system.
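The idea of using each trial to update what is known about the hidden code can be sketched by brute-force enumeration. This is a different technique from the Excel Solver set-up used in the course, and it assumes the common version of the game with 4 pegs and 6 colours; the hidden code and guess are hypothetical. Treating every remaining code as equally likely, the entropy of the remaining set is ln(number of codes), so each trial can be judged by how far it reduces that figure.

```python
import math
from collections import Counter
from itertools import product

COLOURS = "ABCDEF"   # assumed 6 colours
PEGS = 4             # assumed 4 positions

def feedback(guess, code):
    """Return (black, white): right colour in right place, right colour in wrong place."""
    black = sum(g == c for g, c in zip(guess, code))
    common = sum((Counter(guess) & Counter(code)).values())
    return black, common - black

def consistent_codes(candidates, guess, response):
    """Keep only the codes that would have produced the observed response."""
    return [c for c in candidates if feedback(guess, c) == response]

all_codes = list(product(COLOURS, repeat=PEGS))
hidden = tuple("ABCA")    # hypothetical hidden code
guess = tuple("AABB")     # hypothetical first trial

response = feedback(guess, hidden)
remaining = consistent_codes(all_codes, guess, response)

print("entropy before:", math.log(len(all_codes)))
print("entropy after: ", math.log(len(remaining)))
```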

Lecture 33 Mastermind -Information Theory Exercise

Having set up Mastermind using a probabilistic system in Excel, you will use Excel Solver to identify the solution.

Lecture 34 Playing Cluedo using Information Theory

Cluedo is a ‘murder’ board game – but is based on logic. You will learn how to use information theory to solve the murder before any other player. The exercise only focuses on setting up the problem as the solution approach is analogous to Mastermind.

Section 6 Close

You will be reminded of what was covered in the course. You will consider further applications. You will learn the background to the course and mineral processing problems suited to Information Theory. Future and potential courses will be discussed.

Lecture 35 Closing Remarks

In this lecture a brief summary of the course is given, as well as acknowledgements and references.

Course Curriculum

Chapter 1: Introduction

Lecture 1: Scope of Course

Lecture 2: Combinatorics as a basis for probability theory

Lecture 3: Probability Theory

Lecture 4: Non-equitable Probability

Lecture 5: Introduction to Information Theory

Lecture 6: Relative Entropy

Lecture 7: Bayes Work

Lecture 8: Simple Application of Entropy – Dice throwing.

Lecture 9: Statistical Concepts

Lecture 10: Comparison of Maximum Likelihood Theory to Entropy

Chapter 2: The Thermodynamic Perspective of Entropy

Lecture 1: Thermodynamic Perspective

Lecture 2: Calculate Molecular Energy Distribution.

Lecture 3: Practical Application of Thermodynamics

Lecture 4: Reconciling Thermodynamics with Information Theory

Chapter 3: Mineral Processing Applications

Lecture 1: Overview of Examples

Lecture 2: Application to Comminution

Lecture 3: Comminution Exercise

Lecture 4: Conservation of Assays

Lecture 5: Creating Entropy Addins

Lecture 6: Mass Balancing

Lecture 7: Mass Balancing Excel

Lecture 8: Logit Transform

Lecture 9: Logit Transform Exercise

Lecture 10: Mineral To Element

Lecture 11: Correcting Mineral Compositions based on Assays

Chapter 4: Other Applications

Lecture 1: Elections

Lecture 2: Elections Exercise

Lecture 3: The Problem of Weighting

Lecture 4: Weighting Exercise

Lecture 5: Network Problems

Chapter 5: Games and Puzzles

Lecture 1: Mastermind – explanation

Lecture 2: Applying Information Theory to Mastermind

Lecture 3: Mastermind -Information Theory Exercise

Lecture 4: Playing Cluedo using Information Theory

Chapter 6: Close

Lecture 1: Closing Remarks

Lecture 2: Bonus

Instructors

- Stephen Rayward, Principal, Midas Tech International

Rating Distribution

- 1 stars: 0 votes

- 2 stars: 0 votes

- 3 stars: 0 votes

- 4 stars: 0 votes

- 5 stars: 4 votes

Frequently Asked Questions

How long do I have access to the course materials?

You can view and review the lecture materials indefinitely, like an on-demand channel.

Can I take my courses with me wherever I go?

Definitely! If you have an internet connection, courses on Udemy are available on any device at any time. If you don’t have an internet connection, some instructors also let their students download course lectures. That’s up to the instructor though, so make sure you get on their good side!
